Analogue vs Digital Scaled Radiation Monitors

Published: Apr 12, 2020

Analogue in a digital world?

We live in a high-tech world where digital is king. Yes, we still use things that move physically (cars, washing machines), but their controls and parameters are either entirely digital or digitally enhanced (a modern car may even take small measurements of steering-wheel movement and use them to ‘steer’ the car in ‘lane assist’ features).

When I am not at my day job I like to play, write and record music. I have a range of synthesisers, from pure analogue, through hybrid, to pure digital. Don’t get me started on which sounds ‘better’; that is a hot topic (!). The recording system, a Digital Audio Workstation (DAW), runs on a computer and is purely digital. Interestingly, however, when checking audio recording levels we still tend to rely on our eyes (and ears, of course), and the preferred method is an analogue display (digitally created moving meters). When we drive a modern car, the speedometer still tends to be analogue (even if it is rendered graphically or driven by a digital signal). In both cases the eye prefers the analogue display and, importantly, picks up ‘peaks’ and ‘average’ values far better than it does from a numerical digital readout.

Good old analogue radiation monitors

At the very beginning of my career in radiation protection I was introduced to the ‘Mini Monitor’. They came with various probes, but all had a bright yellow box and an analogue display (normally showing dose rate in micro Sv/h or count rate in counts per second, CPS). The Mini 900 D shown below, which still works (despite having lost its control knob), is used on Ionactive training courses. Like other monitors of its vintage, it has a nice analogue scale.

The great thing about this scale is that at the low end you have zero (nothing), < 0.5 micro Sv/h (nearly nothing) and < 1 micro Sv/h (a value with an international legislative basis that implies a very low risk). Depending on the measurement environment, all of these values could represent dose rates that are not measurable above background. Put another way, anything clearly < 1 micro Sv/h was traditionally recorded as ‘Background’, ‘BG’ or ‘zero’. As with the audio level meters and speedometer mentioned above, the eye is a good judge of average, trend and peak, and the scale quickly helps you make a decision.

Moving on to digital radiation monitors

For the record, Ionactive has plenty of digital rate meters attached to all manner of monitoring probes. In addition, in most cases a digital monitor will have an ‘analogue display’ of some sort, similar to the example shown below. Equipment suppliers will therefore argue that my implied preference for analogue is already taken care of. However, in my experience that ‘simulated’ analogue display is often ignored in favour of an ‘absolute’ digital value.

I have seen survey reports written up as '1.243 micro Sv/h'. What measurement value would you have recorded?

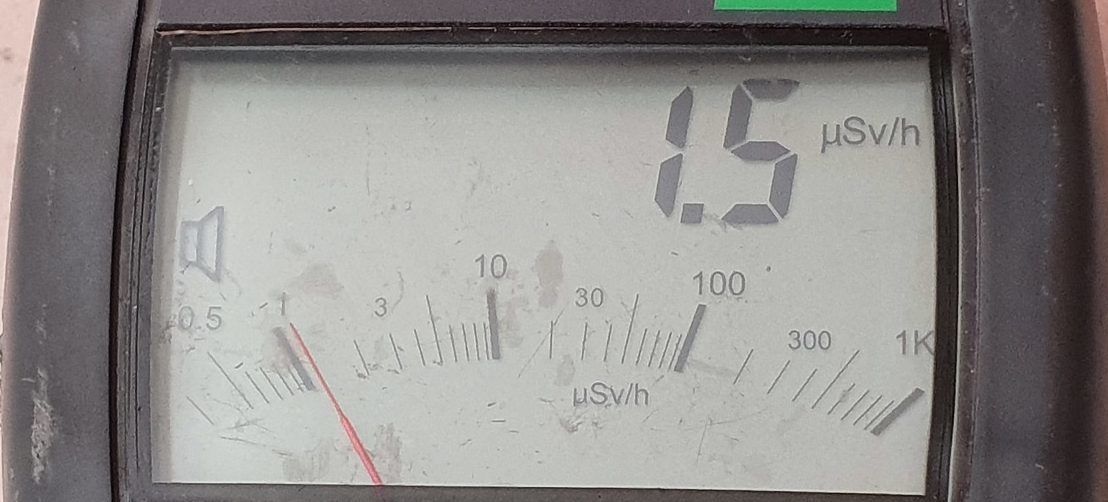

Some time ago, one manufacturer created the following monitor which, in our opinion, makes the best of both analogue and digital (Ionactive has one of these). It has a clear digital display and an analogue display, with the analogue information provided by a traditional mechanical needle with the expected damping (although the number scale itself is drawn by the LCD).

Here is a closer look at the T202 scale (the small Cs-137 calibration source moved slightly, hence the change in the displayed value). Look at the analogue scale - how would you read it?!

Analogue vs Digital measurement values - does it really matter which?

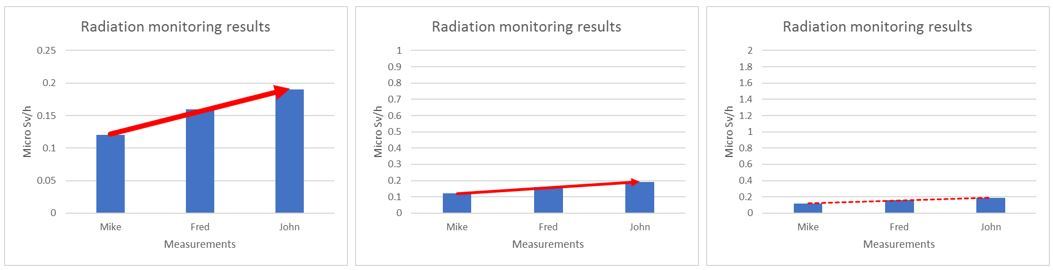

It should not really matter, other than perhaps for personal preference. However, during many site visits to radiation users it appears that digital radiation meters can imply a level of accuracy and precision that does not reflect the true value. In terms of accuracy, we have seen monitoring results stated as 0.15 micro Sv/h, or perhaps 100.5 CPS, and recorded as such. Precision is of course independent of accuracy, but it too can cause unnecessary concern if you believe your digital monitoring result is accurate. For example, the true value might be 0.12 micro Sv/h, and a measurement of 0.12 micro Sv/h taken by worker Mike might be considered accurate and precise. Then worker Fred takes a measurement at the same location at a later date and records 0.16 micro Sv/h. Finally, surveyor John takes a measurement at the same location and records 0.19 micro Sv/h. We have seen these types of values recorded on official radiation survey reports – and in several cases the results are accompanied by a graph indicating a ‘trend’. So, are the dose rates going up over time?!? Perhaps the scales used in any comparison might help? Is drawing a graph to show a trend actually useful or valid in this example (probably not for occupational exposure in the workplace)?
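To put numbers on the Mike, Fred and John example, the short Python sketch below compares the relative spread of the three readings with their absolute spread against the 1 micro Sv/h reference level discussed in this post. The function name `summarise_readings` and the use of 1 micro Sv/h as the comparison level are illustrative assumptions, not a formal survey method.

```python
def summarise_readings(readings_usv_h, reference_usv_h=1.0):
    """Compare the spread of repeated dose-rate readings (micro Sv/h)
    with a reference level (assumed here to be 1 micro Sv/h)."""
    if not readings_usv_h:
        raise ValueError("need at least one reading")
    lowest = min(readings_usv_h)
    highest = max(readings_usv_h)
    if lowest <= 0:
        raise ValueError("readings must be positive to compute a relative change")
    spread = highest - lowest
    return {
        "spread": spread,
        # Relative change between the lowest and highest reading.
        "relative_increase": spread / lowest,
        # Absolute spread expressed as a fraction of the reference level.
        "fraction_of_reference": spread / reference_usv_h,
        # True if every reading sits below the reference level.
        "all_below_reference": highest < reference_usv_h,
    }


# Readings from the example above: Mike, Fred and John at the same location.
summary = summarise_readings([0.12, 0.16, 0.19])
print(f"Spread: {summary['spread']:.2f} micro Sv/h")
print(f"Relative increase: {summary['relative_increase']:.0%}")
print(f"Spread as a fraction of 1 micro Sv/h: {summary['fraction_of_reference']:.0%}")
print(f"All below 1 micro Sv/h: {summary['all_below_reference']}")
```

The relative increase (about 58%) looks alarming on a trend graph, yet the absolute spread (0.07 micro Sv/h) is only a few percent of 1 micro Sv/h, and all three readings would traditionally have been recorded as background.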

Who cares?

One answer might be ‘who cares?’. A more professional answer might be: are these values accurate and precise? To answer this, we need to add context – for example, are these measurements being taken around an x-ray machine in an airport, or as environmental monitoring around the perimeter of a nuclear power plant? I would suggest that if these results were obtained from surface dose rate measurements around the x-ray unit, then they all represent background. In fact, a good analogue monitor with a traditional needle would have shown substantially < 1 micro Sv/h, and the result would probably have been reported correctly as ‘background’. Environmental monitoring around a nuclear power plant is a little different; in many cases such dose rates are measured with long count times (so they are not instantaneous dose rates). Therefore, small fractional increases might be accurate and precise, although I would suggest they are still trivial when compared to natural background.
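Why long count times make small differences meaningful can be illustrated with standard Poisson counting statistics: if a measurement collects N counts, the relative standard deviation of that count is roughly 1/sqrt(N). The Python sketch below assumes a constant count rate, pure Poisson statistics and no dead-time or background correction; the 5 CPS count rate is a hypothetical figure chosen only for illustration.

```python
import math


def relative_uncertainty(count_rate_cps: float, count_time_s: float) -> float:
    """Approximate relative standard deviation of a count, assuming Poisson
    statistics (the standard deviation of N counts is sqrt(N))."""
    counts = count_rate_cps * count_time_s
    if counts <= 0:
        raise ValueError("expected a positive number of counts")
    return 1.0 / math.sqrt(counts)


# Hypothetical count rate of 5 CPS, compared over short and long count times.
for seconds in (10, 60, 3600):
    print(f"{seconds:>5} s count: +/- {relative_uncertainty(5.0, seconds):.1%}")
```

Under these assumptions a 10 s count carries a relative uncertainty of roughly 14%, while an hour-long count brings it below 1%, which is why small fractional changes in long-count environmental data can be real even though they remain trivial compared with natural background.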

For general workplace monitoring of typical ionising radiation sources (radioactive gauges, x-ray cabinets, diagnostic x-ray wall shielding etc), values substantially less than 1 micro Sv/h ARE substantially less than anything to worry about – so perhaps just record background!

What about situations where higher dose rates might be expected (i.e. dose rates demonstrably greater than 1 micro Sv/h)? The same issues apply, which can be illustrated by considering a dose rate survey at the maze entrance of a radiotherapy treatment room.

A useful record of monitoring results might be (for example) 'about 1 micro Sv/h', '< 2 micro Sv/h' or '< 5 micro Sv/h' (perhaps with a peak and an average over the area of the maze recorded). However, we often see results reported as 1.13 micro Sv/h (depending on monitor type), and are probably guilty of this ourselves on occasion. With a good analogue display (using an ion chamber if a linear accelerator is present), this could usefully be recorded as 1 micro Sv/h, or perhaps as no greater than 2 micro Sv/h. This is all that is required to calculate realistic exposure potential at the maze entrance – why not forget the decimal points!? In the above picture, the dose rate is 'about' 1 micro Sv/h.
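One way to make this a habit is to convert the raw digital value into a coarse band before it reaches the survey report. The Python sketch below is a hypothetical helper, `report_dose_rate`; its cut-offs are assumptions loosely based on the bands suggested above (background, about 1, < 2, < 5 micro Sv/h). It knows nothing about the measurement context, so any real thresholds would need to be agreed locally.

```python
# Assumed cut-offs in micro Sv/h: illustrative only, not a regulatory standard.
BACKGROUND_BELOW = 0.5   # readings clearly below 1 micro Sv/h
ABOUT_ONE_BELOW = 1.5    # readings that are "about 1 micro Sv/h"
UPPER_BANDS = (2, 5, 10, 20, 50, 100)


def report_dose_rate(reading_usv_h: float) -> str:
    """Turn a raw digital reading (micro Sv/h) into a coarse, reportable band."""
    if reading_usv_h < 0:
        raise ValueError("dose rate cannot be negative")
    if reading_usv_h < BACKGROUND_BELOW:
        return "Background"
    if reading_usv_h < ABOUT_ONE_BELOW:
        return "about 1 micro Sv/h"
    for upper in UPPER_BANDS:
        if reading_usv_h < upper:
            return f"< {upper} micro Sv/h"
    # Above the highest band, round to the nearest whole micro Sv/h.
    return f"about {round(reading_usv_h)} micro Sv/h"


for raw in (0.15, 1.13, 1.243, 1.8, 3.2):
    print(f"{raw} -> {report_dose_rate(raw)}")
```

With these assumed bands, 0.15 micro Sv/h is reported as background, both 1.13 and 1.243 micro Sv/h become 'about 1 micro Sv/h', and 1.8 micro Sv/h becomes '< 2 micro Sv/h'.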

Radiation monitor innovation - totally supported by Ionactive

Finally – I absolutely support innovation, and it’s clear that modern radiation monitors are better than ever: lower dead times, faster response, a flatter energy response over an extended range, filters to measure different radiations, single survey meters that can be connected to ‘intelligent’ probes for instant setup, data logging, Bluetooth connectivity, cleanable surfaces, background correction, etc. The main point of this post is perhaps rather coded: anything less than 1 micro Sv/h is, in many cases, a trivial risk and effectively no greater than background. This was implicit in the older analogue monitors. The newer digital monitors may be better in every way, but their ‘indicated’ accuracy and precision very near background is not always helpful. This is where training comes in – you need radiation monitor training to correctly interpret and report radiation measurements.

When I deliver radiation monitor training I always try to get the delegates to concentrate initially on two aspects:

- How the monitor sounds. Audible ‘clicks’ may not always be useful (e.g. in noisy workplaces, or where you do not want to cause unnecessary alarm to those around you). However, I find that training your ears to ‘hear’ radiation (clicks) is a good way to appreciate increasing and decreasing count rates. In addition, when considering the inverse square law for a point source, the ear is very good at discerning the rate of increase (as you move towards the source) and decrease (as you move away).

- Look at the analogue display before looking at the headline digital value. This is harder than you might think at first, but once you can set the digital value aside for the moment you are better able to judge the rate of increase or decrease.
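The inverse square law mentioned in the first point can be put into numbers. For an idealised point source, ignoring attenuation, scatter and background, the dose rate at distance d is D = D_ref × (d_ref / d)^2, so halving the distance quadruples the dose rate. The Python sketch below uses a hypothetical reference reading of 1 micro Sv/h at 1 m.

```python
def dose_rate_at(distance_m: float, ref_rate_usv_h: float, ref_distance_m: float) -> float:
    """Inverse square law for an idealised point source (no attenuation,
    scatter or background): D = D_ref * (d_ref / d) ** 2."""
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    return ref_rate_usv_h * (ref_distance_m / distance_m) ** 2


# Hypothetical reference reading: 1 micro Sv/h at 1 m from the source.
for d in (2.0, 1.0, 0.5, 0.25):
    print(f"{d:>4} m: {dose_rate_at(d, 1.0, 1.0):g} micro Sv/h")
```

Moving from 2 m to 0.25 m takes the reading from 0.25 to 16 micro Sv/h, a 64-fold increase, which is the kind of rapidly accelerating change the ear picks up in the click rate.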

Using the above ideas, you can then ask the delegates to take measurements from a small radioactive calibration source using the same instrument. You first ask them to write down the digital value (every value will be different). You then get them to write down the analogue value, and you find them all reporting about 1 micro Sv/h. Result!!